Demystifying Web Scraping: How to Extract Data from Complex Websites

Web scraping has become an essential skill for data analysts, researchers, and developers in many fields. It involves extracting data from websites and storing it in a structured format for analysis or for use in other applications. In this tutorial, we will learn how to scrape a complex website using Scrapy, a Python-based web scraping framework. Our target website will be www.walmart.ca, a popular e-commerce website in Canada. By the end of this tutorial, you will know techniques for handling complex website structures with Scrapy that carry over to many other sites. Let’s get started!

I. Introduction

While learning to scrape simple websites like https://quotes.toscrape.com/ is a good starting point, many beginners struggle to apply their skills to the real-life websites that clients ask for. Such websites often contain complex features and structures that take additional practice to handle. In this article, we will explore how to scrape a complex website, www.walmart.ca, using Scrapy. As a popular e-commerce website in Canada, it presents various challenges that provide valuable learning opportunities. I also recently worked on a client project involving this website, which inspired me to share my insights on how to scrape complex websites effectively.

II. Understanding the Website Structure and Data Sources

Before diving into web scraping, it’s important to ensure that you can access the target website. Some websites have geographic limitations that may prevent you from accessing them. For example, when I try to access loblaws.ca from my location, I receive an “Access Denied” message.

To overcome this, one approach is to use a VPN to connect through a different location. Canada and the United States are good places to start, depending on the website’s domain extension or where it is most popular. Finding a location that works may still take some trial and error. In my case, using a VPN let me get past the restriction. This is one of the many techniques you will learn in this article for scraping complex websites effectively.

III. Selecting a Web Scraping Tool

IV. Preparing Your Web Scraping Environment

Now that we have introduced the topic of web scraping and discussed the benefits of using Scrapy, it’s time to start analyzing complex websites like Walmart. But before we can do that, we need to set up our environment.

Fortunately, this is a straightforward process. Navigate to the required directory, create a virtual environment (if needed), and run the command “pip install scrapy”. Once the installation is complete, we can create a spider for Walmart with the command “scrapy genspider walmart x”. The second argument is the domain the spider is allowed to crawl; x is just a placeholder here.

If you require more detailed explanations on how to set up your environment, there are plenty of resources available. However, for the purpose of this article, we will focus on learning how to effectively scrape this complex website.


V. Scraping Data from the Website

With all of that out of the way, let us try to scrape https://www.walmart.ca/browse/grocery/10019.

We would like to extract the following details from the page:

– product name, product description, product thumbnail, URL, brand, price

I will use a lot of pictures to try to explain my workflow. I hope you can bear with me 🙂

First, let us try to access the website from the Scrapy shell, a useful way to inspect and work with a website before creating the spider.

Run the command “scrapy shell https://www.walmart.ca/browse/grocery/10019” from the terminal.

We get a lot of log output. The two lines I am most interested in are these:

2023-02-25 19:28:35 [scrapy.downloadermiddlewares.redirect] DEBUG: Redirecting (307) to <GET https://www.walmart.ca/blocked?url=L2Jyb3dzZS9ncm9jZXJ5LzEwMDE5&uuid=36f97b43-b53a-11ed-9896-766475744e4b&vid=&g=b> from <GET https://www.walmart.ca/browse/grocery/10019>

2023-02-25 19:28:35 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://www.walmart.ca/blocked?url=L2Jyb3dzZS9ncm9jZXJ5LzEwMDE5&uuid=36f97b43-b53a-11ed-9896-766475744e4b&vid=&g=b> (referer: None)

and in particular this part:

DEBUG: Redirecting (307) to <GET https://www.walmart.ca/blocked?url=L2Jyb3dzZS9ncm9jZXJ5LzEwMDE5&uuid=36f97b43-b53a-11ed-9896-766475744e4b&vid=&g=b>

That’s right: we are being redirected to a page that asks us to prove we are not a bot. How did I know this?

The log says the request was redirected to a walmart.ca URL whose path is /blocked.
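The blocked URL carries one more hint. Its url query parameter appears to be the base64-encoded path of the page we asked for: L2Jyb3dzZS9ncm9jZXJ5LzEwMDE5 decodes to /browse/grocery/10019. The sketch below uses only the standard library to flag a /blocked redirect and recover the original path. The function name blocked_origin is hypothetical. The base64 encoding is inferred from this single redirect, so treat it as an assumption rather than documented site behaviour.

```python
import base64
from urllib.parse import parse_qs, urlparse


def blocked_origin(url):
    """Return the original path if `url` is a /blocked page, otherwise None.

    Assumption: the `url` query parameter holds the base64-encoded path of the
    blocked page (inferred from one observed redirect, not documented anywhere).
    """
    parsed = urlparse(url)
    if parsed.path != "/blocked":
        return None
    encoded = parse_qs(parsed.query).get("url", [""])[0]
    # parse_qs turns "+" into a space; put it back before decoding.
    encoded = encoded.replace(" ", "+")
    # Restore any "=" padding the site may have stripped.
    encoded += "=" * (-len(encoded) % 4)
    return base64.b64decode(encoded).decode("utf-8")
```

In the Scrapy shell, response.url holds the final URL after the redirect, so blocked_origin(response.url) returns a path when we were blocked and None otherwise.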

Another way to check this is to view our response in the browser.

Let’s run view(response) to open the response in the browser.

Yep. That confirms that our bot was blocked.

One way to get a successful 200 response is to mimic a real browser. The simplest step in that direction is to add the headers a browser sends to our request.

Let us go to our browser and copy them.

Open the developer tools (right-click and choose Inspect), then switch to the Network tab.

Paste the Walmart URL into the address bar and hit Enter.

Notice that multiple requests are listed there. The one we are interested in is the request that fetched the page itself.

It is most likely the first request. You can confirm this by clicking on the request and opening the Preview tab. You should see the same page you have on your screen.

Now, why don’t we copy the headers from there?

Right-click on the request and choose Copy as cURL (bash). Then head to https://curlconverter.com/ and paste what you copied. It converts the command into Python code that includes a headers dictionary you can copy.
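If you would rather not paste a request into a website, the header part of that conversion is easy to do yourself. The helper below, headers_from_curl, is a hypothetical standard-library sketch built on shlex. It only reads -H/--header flags from a bash-style curl command and ignores cookies passed with -b/--cookie, so curlconverter remains the more complete tool.

```python
import shlex


def headers_from_curl(curl_command):
    """Collect the -H/--header values of a bash curl command into a dict."""
    # Join bash line continuations ("\" at the end of a line) into one line.
    command = curl_command.replace("\\\n", " ")
    tokens = shlex.split(command)
    headers = {}
    # Walk the tokens in (flag, next token) pairs and keep header flags only.
    for flag, value in zip(tokens, tokens[1:]):
        if flag in ("-H", "--header"):
            name, _, header_value = value.partition(":")
            headers[name.strip()] = header_value.strip()
    return headers
```

The resulting dictionary can be used as headers in the shell, exactly like the one copied from curlconverter.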

Now that we have our headers, let us head back to the terminal. In the same shell session you left open, paste the headers.

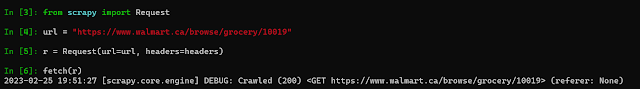

Next, we need to import Request from scrapy and build a request that carries those headers. Run these commands in the same shell:

from scrapy import Request

url = "https://www.walmart.ca/browse/grocery/10019"

r = Request(url=url, headers=headers)

fetch(r)

Oh, look at that: a 200 response :). You just saw one way to get past the block, by adding headers to the request object.

Now that we have our category page, let us go ahead and get our data. Let’s start by selecting the products to see how many there are. For that, we need a selector.

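“#product-results > div” (every div directly inside the element with the id product-results) looks like a good selector. In the browser, we can count 21 products. Let us see how many we get from the terminal with len(response.css("#product-results > div")).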

We got 15. If you look at the website again, you will notice that some products are labeled “Sponsored”. This suggests they are loaded from another source or by JavaScript. (Hint: they are embedded in JavaScript code in the page source. We will look at them in another article.)

We can then get this JSON data out of the script tag using any suitable method. In this case, we can use the selector

It simply means: look for a script whose text contains “window.__PRELOADED_STATE__=”.

Then apply the regular expression r'window.__PRELOADED_STATE__=(.*);$', which captures everything after window.__PRELOADED_STATE__= up to the semicolon that ends the script text.

This pattern is common on many sites, which is why I have taken the time to explain it.

We can then parse the data with the json module as follows:

import json

data = json.loads(data)

products = data.get("results", {}).get("entities", {}).get("products", {})
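The same steps can be sketched with only the standard library. The hypothetical helper extract_products expects the text of every script tag, which in a Scrapy callback could come from response.xpath("//script/text()").getall(). In Scrapy itself, one way to do the filtering and the regex in one call is response.xpath('//script[contains(text(), "window.__PRELOADED_STATE__=")]/text()').re_first(r'window.__PRELOADED_STATE__=(.*);$'). The key path results → entities → products is the one used above, and it may change whenever the site does.

```python
import json
import re

# The article's pattern with the dot in "window." escaped, so it only matches a literal ".".
# The greedy (.*) followed by ;$ captures everything up to the final ";" of the script.
PRELOADED_STATE_RE = re.compile(r"window\.__PRELOADED_STATE__=(.*);$")


def extract_products(script_texts):
    """Return results -> entities -> products from window.__PRELOADED_STATE__, or {}."""
    for text in script_texts:
        # Same idea as the selector: only look at the script that sets the state.
        if "window.__PRELOADED_STATE__=" not in text:
            continue
        match = PRELOADED_STATE_RE.search(text.strip())
        if match is None:
            continue
        data = json.loads(match.group(1))
        return data.get("results", {}).get("entities", {}).get("products", {})
    return {}
```

Because the pattern is anchored to the end of the script, a semicolon inside a JSON string value does not cut the match short. It does assume the assignment is the last statement in that script.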

This JSON data already contains some useful information that we can collect. Just for learning purposes, I collect it directly from here.

Here is what happens: we collect the product names, descriptions, and IDs, store them in a variable called “base”, and construct a product URL. Then we pass base on to the next request, the product details page, using the meta parameter.
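That step can be sketched without Scrapy. The helper product_requests below is hypothetical. The "name" and "description" keys and the /en/ip/{product_id} URL pattern are placeholders, not confirmed Walmart fields, so compare them with the JSON you actually get. In a spider, each pair would typically become scrapy.Request(url, headers=headers, callback=self.parse_product, meta={"base": base}). The hypothetical parse_product callback would then read the dictionary back with response.meta["base"].

```python
from urllib.parse import urljoin

# Hypothetical: check the real product JSON and product page URLs before relying on these.
PRODUCT_PATH_TEMPLATE = "/en/ip/{product_id}"


def product_requests(products, site="https://www.walmart.ca"):
    """Yield (product_url, base) pairs built from the products mapping."""
    for product_id, product in products.items():
        url = urljoin(site, PRODUCT_PATH_TEMPLATE.format(product_id=product_id))
        # "base" holds what we already know; the product page callback adds the rest.
        base = {
            "id": product_id,
            "name": product.get("name"),
            "description": product.get("description"),
            "url": url,
        }
        yield url, base
```

Scrapy also offers cb_kwargs for passing data to callbacks, which some people prefer over meta because the values arrive as named callback arguments.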

Another way is simply to use a selector to get the URL of each product page and visit each one of them.

I show both methods because, on some websites, you won’t be able to get the data via a selector, even though it is embedded in a script. In that case, the first method will do the job. However, if a selector returns the URLs, it is the shorter and easier way to go.

Now our challenge is to get the price and the images. If we try to use a CSS selector to get the images or the price, we won’t succeed. This is probably because the data is loaded later by JavaScript, which Scrapy does not render.

So how do we go about this?

Well, there are two things here. The image data is embedded in the page source, just as the product data was. The price, however, is loaded from an API. More on the price later; let us begin with the images.

If we copy the URL of an image and search for it in the page source, we find a match. (To open the page source, press Ctrl+U, or right-click and choose View Page Source. The page source is what we get without any JavaScript rendering.) Again, the data is embedded in a script tag, so we can extract it in a similar manner.
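Once that script’s JSON is parsed, you still have to work out where the image URL sits inside it. A small recursive search can do that for you. Give it part of the image URL you copied from the browser, and it yields the chain of keys and list indexes that leads to it. find_paths is a generic, hypothetical helper, not code from the repository linked below, and the sample structure in the test is made up.

```python
def find_paths(obj, needle, path=()):
    """Yield the key path of every string in parsed JSON that contains `needle`."""
    if isinstance(obj, dict):
        for key, value in obj.items():
            yield from find_paths(value, needle, path + (key,))
    elif isinstance(obj, list):
        for index, item in enumerate(obj):
            yield from find_paths(item, needle, path + (index,))
    elif isinstance(obj, str) and needle in obj:
        # Found a matching string: report how we got here.
        yield path
```

A path such as ("entities", "skus", "6000", "images", 0, "large", "url") can then be turned into a chain of .get() calls and indexes in the spider.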

One way I visualize the JSON data is with the online tool https://jsonformatter.curiousconcept.com/#. After copying the JSON contents from the page source and formatting them on this site, I can easily fold regions of the JSON that I don’t need. As a beginner, you may find it difficult to tell where the JSON starts and ends, because it looks like junk text, but you will get used to it with time. The general rule is that it starts with a “{” and ends with a “}”.
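The “starts with { and ends with }” rule can also be left to Python’s json module. json.JSONDecoder.raw_decode parses one JSON value from the start of a string and reports where it stopped. Braces inside strings and any JavaScript after the object are therefore handled for you. The sketch below assumes you know some text that appears just before the object, such as a variable name. The marker window.__IMAGES__ in the test is hypothetical.

```python
import json


def json_after_marker(text, marker):
    """Parse the JSON object that follows `marker`, ignoring any code after it."""
    start = text.find(marker)
    if start == -1:
        return None
    brace = text.find("{", start + len(marker))
    if brace == -1:
        return None
    # raw_decode stops at the end of the first complete JSON value and
    # returns (value, end_index), so trailing JavaScript is never parsed.
    value, _end = json.JSONDecoder().raw_decode(text[brace:])
    return value
```

This only looks for an object. If the data after the marker is a JSON array, search for “[” instead.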

Now, let us see how to get the price.

We already tried some CSS selectors, but they did not work. Now let us try a simple search for the price in the page source.

Still nothing. This means the price almost certainly comes from an API, so we will have to find that request and mimic it to get the price.

One way is to open the developer tools (right-click and choose Inspect), then switch to the Network tab.

Now tick the Preserve log and Disable cache checkboxes.

Clear the log and refresh the page. Once that is done, press Ctrl+F while the focus is on the Network tab to open the search box.

Now we can search for the price value or for related keywords, e.g. “price”.

We will get a bunch of requests to glance through.

Notice the one titled “Price-offer”, made to https://www.walmart.ca/api/product-page/v2/price-offer. This looks like what we are looking for, so we have to mimic this request.

Click on that request. You should now be able to see the request URL, the method, the form data, and any other data you need to send to get a response.

Put all of that together and mimic the request.
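Mimicking the request comes down to copying its method, headers, and payload. The sketch below only builds the request with the standard library and does not send it. It assumes, as the form data suggests, a POST with a JSON body. The payload used in the test ({"skus": ["6000"]}) is a made-up placeholder, so copy the real field names from the request’s Payload tab. In a spider, the equivalent would typically be scrapy.http.JsonRequest(PRICE_OFFER_URL, data=payload, headers=headers, callback=...), which serializes data to JSON and switches the method to POST.

```python
import json
from urllib.request import Request

PRICE_OFFER_URL = "https://www.walmart.ca/api/product-page/v2/price-offer"


def build_price_request(payload, headers):
    """Build, but do not send, a POST request with a JSON body for the price-offer API.

    Assumption: the endpoint expects JSON; `payload` must use the field names
    seen in the browser's Payload tab (none are known here).
    """
    body = json.dumps(payload).encode("utf-8")
    # Keep the browser headers and declare the JSON body.
    request_headers = {**headers, "Content-Type": "application/json"}
    return Request(PRICE_OFFER_URL, data=body, headers=request_headers, method="POST")
```

Building the request separately makes it easy to compare its method, headers, and body against what the browser sent before you fire it from the spider.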

The complete code can be found here https://github.com/nfonjeannoel/web_scraping_complex_websites/blob/main/walmart.py

Now you just need to parse the response, put everything together, and yield the data.

VI. Conclusion

That is it for part one of scraping complex websites. This is already getting long, so I will split the rest into separate articles. We still have a couple of things left to do:

- Collect sponsored products

- Collect products loaded when we scroll down

- Handle pagination

- Optimize the spider to make fewer than half the requests it currently makes

- Talk about blocking